Programs Used: Python, Keras, and Pandas

Motivation: As the number of images taken to survey galaxies increases, so does the need to classify those galaxies. Currently, many galaxies are classified by crowdsourcing: volunteers identify particular shapes and morphologies by hand. Although this approach has worked in the past, it becomes less feasible as the number of images grows into the millions. This project addresses the problem by using Convolutional Neural Networks to classify these images in a way that scales to massive data sets.

Introduction: The objective of this project is to create a model that classifies galaxies as accurately as possible while remaining scalable to larger data sets. The data set used for this project is publicly available at: www.kaggle.com/c/galaxy-zoo-the-galaxy-challenge

Information about the dataset can be found at:

https://arxiv.org/abs/1308.3496

Model performance was measured by how closely the model's outputs matched the given classifications.
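The write-up does not spell out the exact metric. As a minimal sketch, assuming both the model outputs and the labels are vote-fraction vectors, two natural measures are the root-mean-square error over the fractions (the metric the Kaggle competition scored) and an "accuracy" that checks whether the answer with the most votes matches the answer with the highest predicted fraction, which is what Keras's categorical accuracy computes. The function name `evaluate` and the sample values are hypothetical.

```python
import numpy as np

def evaluate(true_fractions, pred_fractions):
    """Return (RMSE over all fractions, share of rows whose top answer matches)."""
    true_fractions = np.asarray(true_fractions, dtype=float)
    pred_fractions = np.asarray(pred_fractions, dtype=float)
    rmse = float(np.sqrt(np.mean((true_fractions - pred_fractions) ** 2)))
    # Argmax agreement: does the most-voted answer get the highest predicted fraction?
    accuracy = float(np.mean(true_fractions.argmax(axis=1) == pred_fractions.argmax(axis=1)))
    return rmse, accuracy

# Made-up vote fractions for two galaxies on a three-answer question.
true = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]]
pred = [[0.6, 0.3, 0.1], [0.5, 0.4, 0.1]]
rmse, acc = evaluate(true, pred)  # acc is 0.5: only the first galaxy's top answer matches
```

RMSE rewards getting the whole vote distribution right, while argmax accuracy only cares about the winning answer, so the two can disagree on which model is better.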

Dataset: The data used to train the classifier consists of 60000 412×412 RGB images. Each image is labelled with the percentage share of votes each answer received from the volunteers who classified these galaxies in the survey.
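A minimal pandas sketch of turning these labels into training targets for a single survey question. It assumes the solutions file has a `GalaxyID` column plus one `ClassQ.A` column per question/answer pair (for example `Class1.1`); the rows below are made-up stand-ins for what `pd.read_csv` would return, and `question_targets` is a hypothetical helper.

```python
import numpy as np
import pandas as pd

def question_targets(labels: pd.DataFrame, question: int) -> np.ndarray:
    """Return the vote-fraction columns for one survey question as a float array."""
    prefix = f"Class{question}."  # trailing dot keeps e.g. Class10.x out of question 1
    cols = [c for c in labels.columns if c.startswith(prefix)]
    return labels[cols].to_numpy(dtype=np.float32)

# Made-up rows in the assumed column layout (in practice: pd.read_csv on the solutions CSV).
labels = pd.DataFrame({
    "GalaxyID": [1, 2],
    "Class1.1": [0.50, 0.10],
    "Class1.2": [0.40, 0.85],
    "Class1.3": [0.10, 0.05],
    "Class2.1": [0.00, 0.85],
})
targets = question_targets(labels, 1)  # shape (2, 3): one row of fractions per galaxy
```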

Convolutional Neural Networks: Image classification competitions played a large part in the boom of interest in Convolutional Neural Networks (CNNs) and deep network architectures. One of the most instructive examples of how much CNNs changed image recognition is their success on the ImageNet Challenge. In 2011 a “good” image classifier could achieve an error rate of around 25% on this challenge [1]. In 2012 a deep CNN redefined what an effective image classifier could do by achieving an error rate of 16%. This triggered a wave of research into deep CNN architectures, with modern architectures able to achieve error rates below 5% [1].

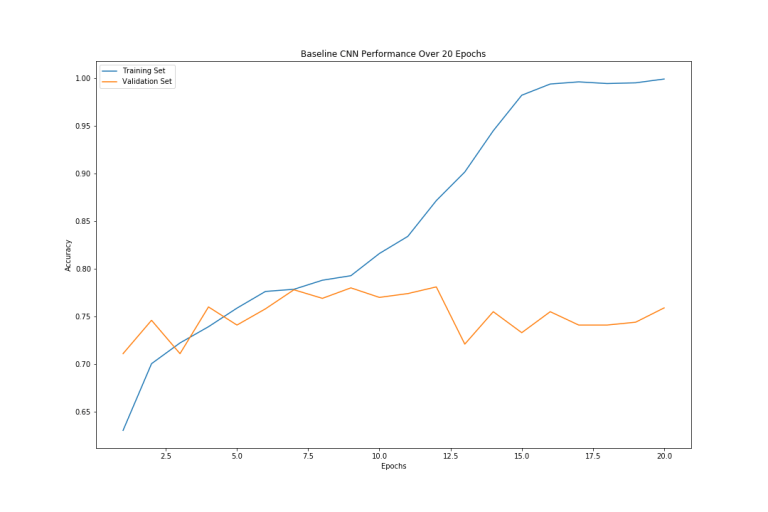

Baseline Model: To gauge how effective the final model is at classifying galaxies, a “simple” five-layer convolutional network (three convolutional layers followed by two dense layers) was created for comparison. This baseline was trained on 5000 images to determine how well a relatively simple network performs on this problem. The results for this “simple” network are shown below:

After around 7 passes through the data (epochs), the simple CNN begins to overfit, with validation set accuracy peaking at 78%. The loss plot reinforces this trend, showing the training set loss and the test set loss diverging at around the same point.
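The baseline's filter counts and kernel sizes are not given, so the sketch below is only one plausible reading of "three convolutional layers followed by two dense layers": the filter counts, kernel sizes, pooling layers, dense width and input shape are hypothetical placeholders. Because the curves diverge after about 7 epochs, it also creates Keras's `EarlyStopping` callback, which halts training once validation loss stops improving and restores the best weights; the patience of 3 is an assumption.

```python
from keras import Input, Sequential, layers
from keras.callbacks import EarlyStopping

def build_baseline(input_shape=(169, 169, 1), num_classes=3):
    """Hypothetical baseline: three conv layers followed by two dense layers."""
    return Sequential([
        Input(shape=input_shape),
        layers.Conv2D(16, (3, 3), activation="relu"),
        layers.MaxPooling2D((2, 2)),  # pooling is an assumption to keep the dense layer small
        layers.Conv2D(32, (3, 3), activation="relu"),
        layers.MaxPooling2D((2, 2)),
        layers.Conv2D(64, (3, 3), activation="relu"),
        layers.MaxPooling2D((2, 2)),
        layers.Flatten(),
        layers.Dense(64, activation="relu"),
        layers.Dense(num_classes, activation="softmax"),
    ])

baseline = build_baseline()
baseline.compile(optimizer="adam", loss="categorical_crossentropy", metrics=["accuracy"])

# Stop once validation loss has not improved for 3 epochs and keep the best weights.
early_stop = EarlyStopping(monitor="val_loss", patience=3, restore_best_weights=True)
# baseline.fit(x_train, y_train, validation_data=(x_val, y_val), epochs=30, callbacks=[early_stop])
```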

Model Architecture:

Input: To reduce the number of parameters needed in the model, images were cropped to 169 x 169 pixels with a single channel (169 x 169 x 1) before being passed into the model. A sample 169 x 169 image can be viewed below:

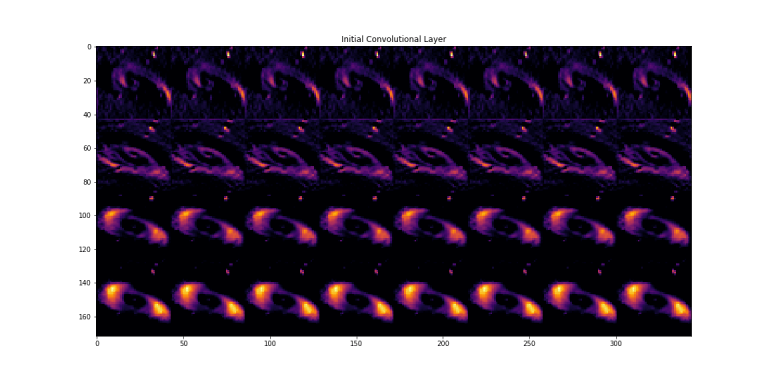

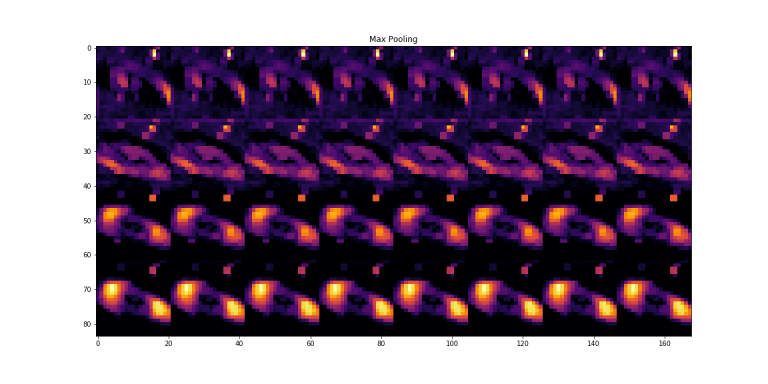

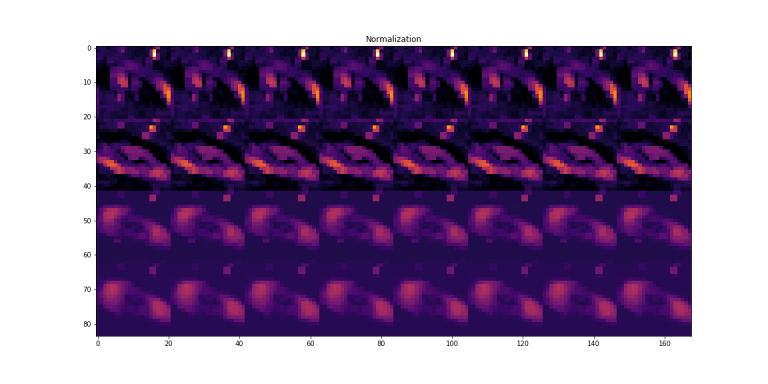
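The write-up does not say where the crop is taken or how the single channel is produced. A minimal NumPy sketch, assuming a centered crop (the galaxy of interest sits at the image center) and grayscale by averaging the RGB channels, with pixel values rescaled to [0, 1]; `preprocess` is a hypothetical helper.

```python
import numpy as np

def preprocess(image: np.ndarray, size: int = 169) -> np.ndarray:
    """Center-crop an (H, W, 3) uint8 image and reduce it to (size, size, 1) in [0, 1]."""
    h, w = image.shape[:2]
    top, left = (h - size) // 2, (w - size) // 2
    crop = image[top:top + size, left:left + size]
    gray = crop.mean(axis=-1, keepdims=True)  # average the RGB channels into one
    return (gray / 255.0).astype(np.float32)

rgb = np.zeros((412, 412, 3), dtype=np.uint8)
rgb[206, 206] = 255  # one bright pixel at the image center
x = preprocess(rgb)  # shape (169, 169, 1); the bright pixel lands at x[85, 85, 0]
```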

First Layer: The model's architecture draws largely on AlexNet, but with a greatly reduced number of parameters and no parallelized layers [2]. The first layer is a convolutional layer with 32 filters of size 11 x 11 and a stride of 4 x 4. Its outputs are activated with rectified linear units (ReLU; every layer except the final output layer uses this activation), then max pooled and batch normalized before being fed into the second convolutional layer.
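A quick worked check of the first layer's sizes, assuming Keras semantics for `padding='same'` (output side is the input side divided by the stride, rounded up), unpadded 3 x 3 pooling with stride 2 as in the sample code, and one input channel:

$$
\left\lceil \frac{169}{4} \right\rceil = 43, \qquad \left\lfloor \frac{43 - 3}{2} \right\rfloor + 1 = 21
$$

$$
\underbrace{11 \cdot 11 \cdot 1 \cdot 32}_{\text{weights}} + \underbrace{32}_{\text{biases}} = 3904 \text{ parameters}
$$

So the first convolution and pooling step turns each 169 x 169 x 1 image into a 21 x 21 x 32 map, and the convolution itself has only 3904 trainable parameters.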

This first layer serves as a form of feature detection. The activations of this first layer are visualized below to show the output that will be fed into the second layer. These images show how the model is able to pick out such features as the spiral structure of galaxies.
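One way to pull out such activation maps in Keras is to build a new `keras.Model` from the original model's inputs to the first layer's output. To stay self-contained, this sketch rebuilds only the first convolution (untrained) and feeds a random stand-in image; in practice the trained model and a real cropped galaxy would be used, and each of the 32 channels could then be plotted (for example with matplotlib's `imshow`).

```python
import numpy as np
from keras import Input, Model, Sequential, layers

# Rebuild only the first layer so the example runs on its own (untrained weights).
model = Sequential([
    Input(shape=(169, 169, 1)),
    layers.Conv2D(32, (11, 11), strides=(4, 4), padding="same", activation="relu"),
])

# New model mapping the original input to the first layer's output.
extractor = Model(inputs=model.inputs, outputs=model.layers[0].output)

image = np.random.rand(1, 169, 169, 1).astype("float32")  # stand-in for a cropped galaxy
activations = extractor.predict(image, verbose=0)  # shape (1, 43, 43, 32)
```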

Second Layer: The second layer of the model is another convolutional layer, with 64 filters of size 5 x 5 and a stride of 1. Its outputs are pooled and normalized in the same manner as the first layer's before being passed into the third convolutional layer.
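During training, Keras's `BatchNormalization` standardizes each channel using the mean and variance over the batch and spatial positions, then applies a learned scale (`gamma`) and shift (`beta`); at inference it uses moving averages of those statistics instead. A minimal NumPy sketch of the training-time computation, using Keras's default `epsilon` of 0.001; `batch_norm_train` is a hypothetical name.

```python
import numpy as np

def batch_norm_train(x, gamma, beta, eps=1e-3):
    """Training-mode batch norm for x of shape (batch, height, width, channels)."""
    mean = x.mean(axis=(0, 1, 2), keepdims=True)  # per-channel mean
    var = x.var(axis=(0, 1, 2), keepdims=True)    # per-channel variance
    x_hat = (x - mean) / np.sqrt(var + eps)
    return gamma * x_hat + beta                   # gamma, beta broadcast over channels

rng = np.random.default_rng(0)
x = rng.normal(5.0, 3.0, size=(8, 8, 8, 64))  # shaped like the pooled second-layer output
y = batch_norm_train(x, gamma=np.ones(64), beta=np.zeros(64))
```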

Third, Fourth and Fifth Layers: The third, fourth and fifth layers are convolutional layers with the same structure: each has 128 filters of size 3 x 3, and its outputs are fed directly into the next layer without pooling or normalization. The outputs of the final convolutional layer are flattened to a 1D array before being passed to the sixth layer.
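Tracing the spatial size through the whole convolutional stack shows why the flattened vector is small. This sketch assumes the padding used in the sample Keras code (only the first convolution uses `'same'`; everything else is unpadded) and the standard Keras output-size rules; `out_size` and `trace` are hypothetical helpers.

```python
import math

def out_size(size, kernel, stride, padding):
    """Keras output side length for Conv2D / MaxPooling2D."""
    if padding == "same":
        return math.ceil(size / stride)
    return (size - kernel) // stride + 1  # "valid" (no padding)

# (name, kernel, stride, padding, channels after the layer)
STACK = [
    ("conv1", 11, 4, "same", 32),
    ("pool1", 3, 2, "valid", 32),
    ("conv2", 5, 1, "valid", 64),
    ("pool2", 3, 2, "valid", 64),
    ("conv3", 3, 1, "valid", 128),
    ("conv4", 3, 1, "valid", 128),
    ("conv5", 3, 1, "valid", 128),
]

def trace(size=169):
    shapes = []
    for name, kernel, stride, padding, channels in STACK:
        size = out_size(size, kernel, stride, padding)
        shapes.append((name, size, size, channels))
    return shapes

shapes = trace()
flat = shapes[-1][1] * shapes[-1][2] * shapes[-1][3]  # 2 * 2 * 128 = 512 values after Flatten
```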

Sixth and Seventh Layers: The sixth and seventh layers are dense layers of 512 units each. Dropout with a rate of 0.5 is applied to the outputs of both layers to reduce overfitting.
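Keras applies dropout only during training: each unit is zeroed with probability equal to the rate, and the surviving units are scaled by 1 / (1 - rate) so the expected activation is unchanged ("inverted" dropout); at inference the layer passes its input through untouched. A minimal NumPy sketch of the training-time behaviour; `inverted_dropout` is a hypothetical name.

```python
import numpy as np

def inverted_dropout(x, rate=0.5, rng=None):
    """Zero each unit with probability `rate`, scale survivors by 1 / (1 - rate)."""
    rng = np.random.default_rng() if rng is None else rng
    keep = rng.random(x.shape) >= rate  # True with probability 1 - rate
    return np.where(keep, x / (1.0 - rate), 0.0)

x = np.ones((4, 512))  # a batch of dense-layer outputs
y = inverted_dropout(x, rate=0.5, rng=np.random.default_rng(0))  # entries are 0.0 or 2.0
```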

Eighth Layer: The number of units in the final layer equals the number of possible answers to the survey question being modeled. The first survey question has three possible answers, so the model for question one has three dense units. A softmax activation then transforms this output into the model's prediction for each image.
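A minimal NumPy sketch of the final softmax, plus a cross-entropy loss that treats the vote fractions as soft targets. The write-up does not state which loss was used, so categorical cross-entropy is an assumption here, and the logits and targets are made-up values.

```python
import numpy as np

def softmax(logits):
    z = logits - logits.max(axis=-1, keepdims=True)  # subtract max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)

def categorical_crossentropy(targets, probs, eps=1e-7):
    """Mean cross-entropy between target fractions and predicted probabilities."""
    return float(-np.mean(np.sum(targets * np.log(np.clip(probs, eps, 1.0)), axis=-1)))

logits = np.array([[2.0, 1.0, 0.1]])   # made-up outputs of the three-unit dense layer
targets = np.array([[0.6, 0.3, 0.1]])  # made-up question-one vote fractions
probs = softmax(logits)                # non-negative, sums to 1 like the vote fractions
loss = categorical_crossentropy(targets, probs)
```

Because softmax outputs are non-negative and sum to 1, they are directly comparable with the vote fractions for a question whose answers are mutually exclusive.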

Sample Keras Code:

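```python
from keras.models import Sequential
from keras.layers import BatchNormalization, Conv2D, Dense, Dropout, Flatten, MaxPooling2D

input_shape = (169, 169, 1)  # cropped single-channel images
num_classes = 3              # possible answers to survey question one

model = Sequential()
# First layer: 32 filters of 11 x 11 with a 4 x 4 stride, then pooling and batch normalization
model.add(Conv2D(32, (11, 11), padding='same', activation='relu', strides=(4, 4), input_shape=input_shape))
model.add(MaxPooling2D((3, 3), strides=(2, 2)))
model.add(BatchNormalization())
# Second layer: 64 filters of 5 x 5, then pooling and batch normalization
model.add(Conv2D(64, (5, 5), activation='relu'))
model.add(MaxPooling2D((3, 3), strides=(2, 2)))
model.add(BatchNormalization())
# Third to fifth layers: 128 filters of 3 x 3 each, no pooling in between
model.add(Conv2D(128, (3, 3), activation='relu'))
model.add(Conv2D(128, (3, 3), activation='relu'))
model.add(Conv2D(128, (3, 3), activation='relu'))
# Sixth and seventh layers: dense layers of 512 units with dropout
model.add(Flatten())
model.add(Dense(512, activation='relu'))
model.add(Dropout(0.5))
model.add(Dense(512, activation='relu'))
model.add(Dropout(0.5))
# Eighth layer: one softmax unit per possible answer
model.add(Dense(num_classes, activation='softmax'))
```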

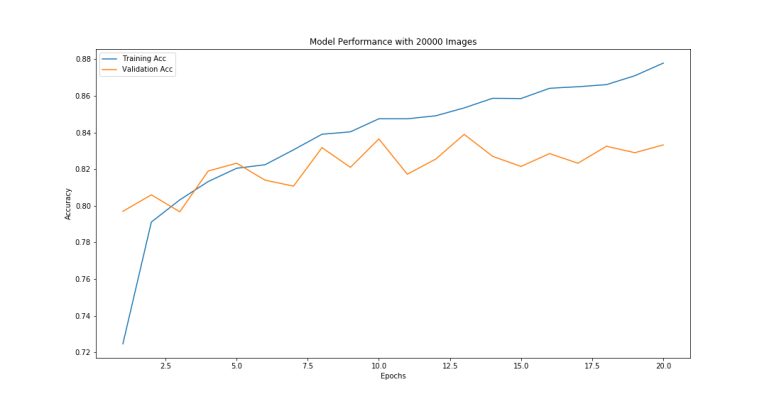

Model Performance: Overall, this model far outperformed the baseline network. The highest accuracy it reached was 84%, 6 percentage points higher than the baseline's best of 78%. It was also far more consistent from one pass through the data to the next, maintaining a validation set accuracy of around 82%. Notably, even when trained on 20000 images, this model took less time per pass through the data than the baseline took on 5000 images, suggesting that the measures taken to reduce training time were effective.

The full project can be found at:

https://github.com/LiamWoodRoberts/Image_Classification_for_Galaxy_Morphologies